Visual Intelligence

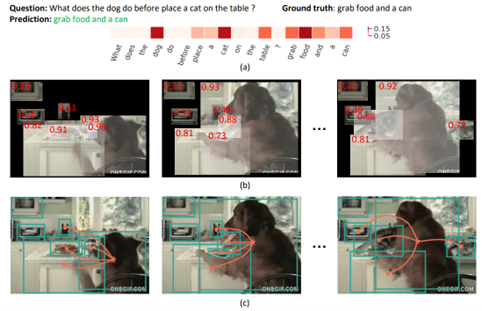

A*STAR I²R creates innovative capabilities and advanced technologies to automate and augment visual intelligence. We aim to achieve human-AI symbiosis through visual analysis, visual question answering (Q&A), augmentation, reasoning, and interactive synthesis. To address practical problems, we develop fast, responsive visual understanding models for real-time interaction. These models foster synergy between human workers and AI applications, augmenting workflows rather than replacing human involvement.

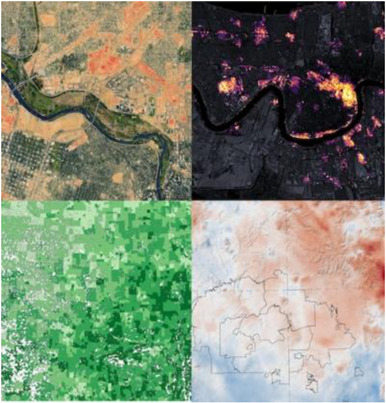

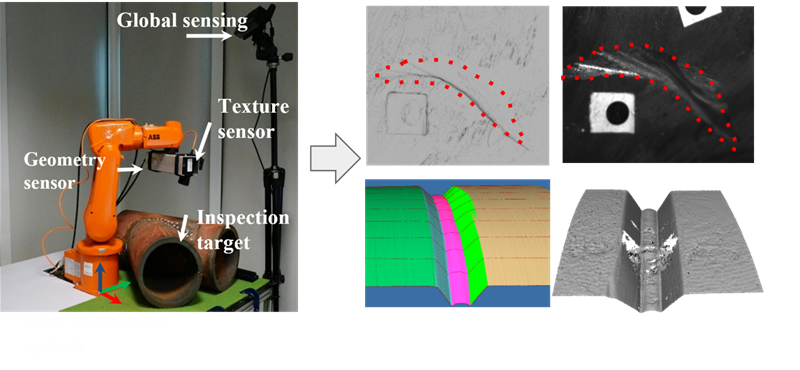

Our research focuses on visual learning and reasoning through continual learning, neuroscience-inspired AI, and controllable image and video generation. We specialise in technologies such as 3D computer vision, image, video and point cloud analysis, and 3D geometry modelling and quantification. Notably, we conduct indoor mapping for building inspection and construction progress tracking, and we have demonstrated warehouse content mapping and tracking.