IHPC Tech Hub

Discover how computational modelling, simulation and AI can bring positive impact to your business.


Digital Emotions - Multimodal Affective AI Engine

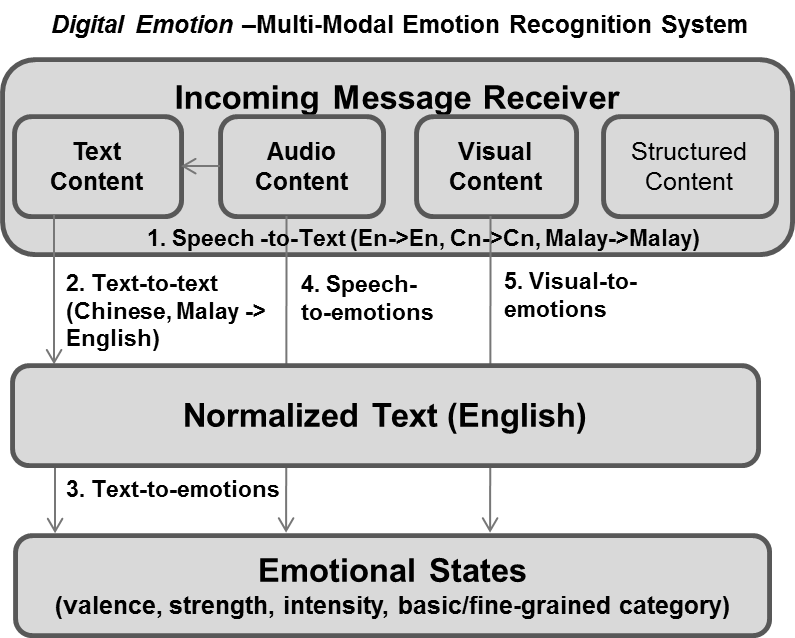

Digital Emotions, or DE for short, is an integrative technology platform that brings together six of A*STAR’s best-in-class technologies in visual, acoustic and linguistic emotion analysis, automatic speech recognition, machine translation, natural language understanding and affective computing.

The DE platform was developed through a collaborative project across multiple A*STAR research institutes (RIs), spearheaded and supported by A*STAR’s Science and Engineering Research Council, A*STAR Enterprise and A*ccelerate. The research team comprises scientists and engineers from A*STAR’s Institute of High Performance Computing (IHPC), A*STAR’s Institute for Infocomm Research (I2R), and the Advanced Digital Sciences Center (ADSC).

Emotion analysis to date has been dominated by reliance on visual/facial expression-based features, and has predominantly considered a single speaker language, typically English, assuming a Western cultural norm and perspective. The key innovation of Project Digital Emotions rests on the core design principle that, in the real world, emotional communication is a multidimensional, multimodal and dynamic function that varies with a diverse range of extrinsic and intrinsic contextual factors. These include, but are not limited to, the types, characteristics and intensities of emotions, the episodic duration of the emotional expression, and the speaker’s language, gender, age and ethnicity. Our research and technological innovation emphasise 1) a solid theoretical grounding in emotion, 2) sociocultural-level understanding, and 3) a strong foundation of proven pretrained technologies as platform components; together, these significantly differentiate our efforts from existing approaches.

Features

- Accepts unimodal, dual-modal and/or tri-modal data input: image (.jpg or .png), audio/speech (.wav), text (speech transcript, tweet, comment, etc. in .csv) or video (.mp4)

- Produces emotion analysis results that predict the human speaker’s emotional expression in terms of emotion type (fear, anger, sadness, joy, etc.), emotion dimensions (valence, arousal) and emotion intensity (from 0, barely noticeable, to 1, extremely intense)

- Reliable, pretrained and validated multimodal systems (visual, acoustic and semantic), ready to deploy for licensing and/or project use, either as a combined full system or as standalone, independent modules

- Speaker language supported: English (ready), Chinese (ready), Malay (preliminary model ready)

- Availability mode: Docker image (preferred), and/or Python SDK
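To make the input and output description above more concrete, here is a minimal Python sketch. It is not the DE SDK: the names `EXTENSION_TO_MODALITIES`, `modalities_for` and `EmotionResult` are hypothetical, and treating a video (.mp4) as carrying all three modalities (frames, audio track, and a transcript obtained through speech recognition) is an assumption. Counting the modalities covered by a set of files distinguishes unimodal, dual-modal and tri-modal input, and the result record mirrors the three kinds of output listed: emotion type, valence/arousal dimensions, and intensity on a 0 to 1 scale.

```python
from dataclasses import dataclass
from pathlib import Path

# Hypothetical mapping from the supported file formats to input modalities.
# Assumption: a video carries visual, acoustic and (via speech recognition) linguistic cues.
EXTENSION_TO_MODALITIES = {
    ".jpg": {"visual"},
    ".png": {"visual"},
    ".wav": {"acoustic"},
    ".csv": {"linguistic"},
    ".mp4": {"visual", "acoustic", "linguistic"},
}


def modalities_for(paths):
    """Return the set of modalities covered by the given input files."""
    covered = set()
    for path in paths:
        ext = Path(path).suffix.lower()
        if ext not in EXTENSION_TO_MODALITIES:
            raise ValueError(f"unsupported input format: {ext!r}")
        covered |= EXTENSION_TO_MODALITIES[ext]
    return covered


@dataclass
class EmotionResult:
    """Hypothetical result record; field names are illustrative only."""
    emotion: str      # emotion type, e.g. "joy" or "anger"
    valence: float    # emotion dimension (scale not specified in the source)
    arousal: float    # emotion dimension (scale not specified in the source)
    intensity: float  # 0 = barely noticeable, 1 = extremely intense

    def __post_init__(self):
        # Enforce the 0-to-1 intensity scale described in the feature list.
        if not 0.0 <= self.intensity <= 1.0:
            raise ValueError("intensity must lie in [0, 1]")


# Usage: an image plus an audio clip is dual-modal input.
n = len(modalities_for(["face.jpg", "speech.wav"]))
print(n)  # 2
result = EmotionResult(emotion="joy", valence=0.6, arousal=0.4, intensity=0.8)
```

Validating inputs up front, before any model runs, is one simple way to reject unsupported formats early; the real platform may handle this differently.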

The Science Behind

Project Digital Emotions was supported by the A*STAR Science and Engineering Research Council’s Strategic Fund (2017 – 2020). Recognising global digital technology trends and local industry demands, and seeing opportunities to leverage A*STAR’s scientific and technological capabilities for important new uses, a team of lead researchers developed a proposal and delivered the Digital Emotions project, entitled “An Integrative Approach to Sociocultural-level Emotion Recognition from Multi-Modal Cues”. The work was further supported by A*ccelerate’s Gap Fund (2019 – 2020), which enabled the technologies to be developed to higher technology readiness levels (TRLs) to meet sector-specific industry needs.

The project integrates psychological science capabilities with technological advances, and has successfully built a next-generation integrative system capable of recognising emotions from visual and non-visual cues. The platform is built upon six cross-domain deep-tech capabilities:

- Automatic speech recognition

- Neural machine translation

- Linguistic emotion analysis

- Acoustic emotion analysis

- Visual/facial emotion analysis

- Multimodal emotion recognition, social intelligence and affective computing
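The last capability, multimodal emotion recognition, requires combining evidence from several channels. The sketch below shows weighted late fusion, a common baseline in which each modality's classifier outputs a probability distribution over emotion types and those distributions are averaged with per-modality weights. It is an illustrative assumption, not a description of how DE actually fuses modalities; the function name `late_fusion`, the emotion label set and the weights are hypothetical. Renormalising the weights over the modalities actually present lets the same function handle unimodal, dual-modal and tri-modal input.

```python
# Hypothetical emotion label set; DE's actual label set may differ.
EMOTIONS = ("anger", "fear", "joy", "sadness")


def late_fusion(scores, weights):
    """Weighted late fusion of per-modality emotion probabilities.

    scores:  {modality: {emotion: probability}}; absent modalities are simply omitted
    weights: {modality: non-negative weight}
    Returns a fused {emotion: probability} distribution.
    """
    present = [m for m in scores if weights.get(m, 0.0) > 0.0]
    if not present:
        raise ValueError("no input modality with a positive weight")
    total = sum(weights[m] for m in present)
    fused = {e: 0.0 for e in EMOTIONS}
    for m in present:
        w = weights[m] / total  # renormalise over the modalities actually present
        for e in EMOTIONS:
            fused[e] += w * scores[m].get(e, 0.0)
    return fused


# Usage: dual-modal input (no text), so the linguistic weight is ignored.
scores = {
    "visual": {"joy": 0.6, "sadness": 0.4},
    "acoustic": {"joy": 0.2, "anger": 0.8},
}
weights = {"visual": 0.6, "acoustic": 0.4, "linguistic": 1.0}
fused = late_fusion(scores, weights)
top = max(fused, key=fused.get)  # "joy" (about 0.44) ahead of "anger" (about 0.32)
```

Late fusion keeps each single-modality analyser independent, which suits deployment as standalone modules; fusing features before classification (early fusion) is the usual alternative when cross-modal interactions matter.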

Industry Applications

To date, the Digital Emotions suite of technologies has been adopted, licensed and/or used in projects with more than twelve public and private sector organisations across media and publishing, consumer care, hospitality and transport, professional services, and public health.

A system demo of DE is available upon request.

For more info or collaboration opportunities, please write to enquiry@ihpc.a-star.edu.sg
